| Uploader: | Ramaer |

| Date Added: | 01.12.2015 |


Amazon S3 - Downloading and Uploading to Buckets using Python Boto3

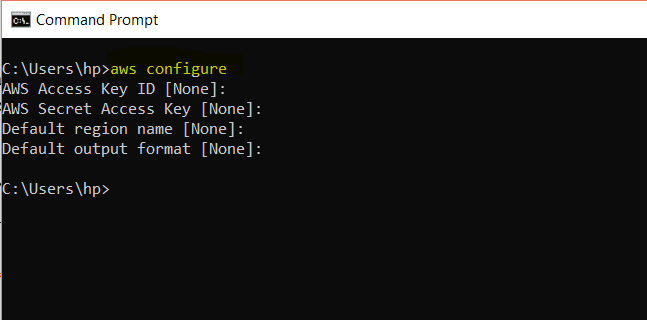

Download S3 File Using Boto3

I'm currently writing a script in which I need to download S3 files to a newly created directory. I create a boto3 session with credentials, create a boto3 resource from that session, then use it to query and download from my S3 location.

Boto3 is the Amazon Web Services (AWS) Software Development Kit (SDK) for Python, which allows Python developers to write software that makes use of services like Amazon S3 and Amazon EC2. The latest documentation is available on the Boto3 doc site.

The methods provided by the AWS SDK for Python to download files are similar to those provided to upload files. The `download_file` method accepts the names of the bucket and object to download and the filename to save the file to: after `import boto3`, create a client with `s3 = boto3.client('s3')` and call `s3.download_file('BUCKET_NAME', 'OBJECT_NAME', 'FILE_NAME')`.
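As a minimal sketch of downloading into a directory that the script creates itself, the helper below wraps `download_file`. The function name `download_to_directory` and the `downloads` directory are assumptions made for illustration; the client is passed in so the helper can be exercised without AWS access.

```python
import os


def download_to_directory(s3_client, bucket, key, target_dir):
    """Download one object into target_dir, creating the directory first.

    s3_client is expected to be a boto3 S3 client (boto3.client("s3")).
    """
    # Create the directory (and any missing parents); no error if it exists.
    os.makedirs(target_dir, exist_ok=True)
    # Use only the last component of the key as the local file name.
    local_path = os.path.join(target_dir, os.path.basename(key))
    s3_client.download_file(bucket, key, local_path)
    return local_path


# Example usage (requires boto3 and valid AWS credentials):
# import boto3
# s3 = boto3.client("s3")
# download_to_directory(s3, "BUCKET_NAME", "OBJECT_NAME", "downloads")
```

Because only the last path component is kept, two keys with the same file name in different "folders" would map to the same local file under this sketch.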

Boto3 download s3 file


I'm using boto3 to get files from an S3 bucket. I need functionality similar to aws s3 sync. My code works fine as long as the bucket contains only files. If a folder is present inside the bucket, it throws an error.

This solution first compiles a list of objects, then iteratively creates the specified directories and downloads the existing objects. To maintain the appearance of directories, path names are stored as part of the object Key (filename).
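The list-then-download approach can be sketched roughly as follows. This is an illustration under stated assumptions, not the original answer's code: the function name `download_prefix` is hypothetical, the client is passed in as a parameter, and the full key is kept as the local relative path.

```python
import os


def download_prefix(s3_client, bucket, prefix, local_dir):
    """Download every object under prefix, recreating the key hierarchy locally.

    s3_client is expected to be a boto3 S3 client (boto3.client("s3")).
    """
    # Step 1: compile the list of keys, one page (up to 1000 keys) at a time.
    paginator = s3_client.get_paginator("list_objects_v2")
    keys = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is absent from a page when nothing matches.
        for obj in page.get("Contents", []):
            keys.append(obj["Key"])

    # Step 2: create directories as needed and download each object.
    downloaded = []
    for key in keys:
        if key.endswith("/"):
            continue  # zero-byte "folder" placeholder, nothing to download
        local_path = os.path.join(local_dir, *key.split("/"))
        os.makedirs(os.path.dirname(local_path), exist_ok=True)
        s3_client.download_file(bucket, key, local_path)
        downloaded.append(local_path)
    return downloaded


# Example usage (requires boto3 and valid AWS credentials):
# import boto3
# download_prefix(boto3.client("s3"), "BUCKET_NAME", "some/prefix/", "out")
```

The loop is iterative rather than recursive, so deeply nested prefixes do not increase the call depth.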

You could truncate the filename to save only the file itself, dropping the path. Note that there could be multi-level nested directories.

It does the job, but I'm not sure it's good to do it this way. I'm leaving it here to help other users and to invite further answers with a better way of achieving this.

Better late than never: the previous answer with the paginator is really good.

However, it is recursive, and you might end up hitting Python's recursion limits. Here's an alternate approach, with a couple of extra checks. If you want, you can change the directory.

If you would rather call a shell command from Python, a file can also be loaded from a folder in an S3 bucket to a local folder on a Linux machine that way.
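One way to invoke the AWS CLI from Python is through `subprocess`, sketched below. It assumes the `aws` command is installed and configured on the machine; the function names are hypothetical and the bucket, key, and directory values are placeholders.

```python
import subprocess


def build_s3_copy_command(bucket, key, local_dir):
    """Build the argument list for `aws s3 cp s3://<bucket>/<key> <local_dir>`."""
    return ["aws", "s3", "cp", f"s3://{bucket}/{key}", local_dir]


def copy_from_s3(bucket, key, local_dir):
    """Run the AWS CLI; raises CalledProcessError if the command fails.

    local_dir should be an existing directory or end with "/", otherwise
    the CLI treats it as the destination file name.
    """
    # Passing a list (and no shell=True) avoids shell-quoting problems.
    subprocess.run(build_s3_copy_command(bucket, key, local_dir), check=True)


# Example usage (requires the AWS CLI and valid credentials):
# copy_from_s3("BUCKET_NAME", "folder/file.txt", "/tmp/data/")
```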

I had a similar requirement. After reading a few of the solutions above and some other websites, I came up with a script of my own and wanted to share it in case it helps anyone.

Boto3 to download all files from a S3 Bucket

I need functionality similar to aws s3 sync. If a folder is present inside the bucket, my current code throws an error. How can I download folders?

If you paste the last response you get from the boto3 API (whatever is stored in the response variable), I think it will be clearer what is happening in your specific case. Did you check that?

Note that you would need minor changes to make it work with Digital Ocean.

I have the same need and created a function that downloads the files recursively. The directories are created locally only if they contain files.

@Hack-R I don't think you need to create a resource and a client. I believe a client is always available on the resource. You can just use resource.

Isn't there an equivalent of the aws-cli command aws s3 sync available in the boto3 library?

What is dist here?

It is a flat file structure.
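The point about not needing both objects can be illustrated with the `meta.client` attribute that every boto3 resource exposes. The sketch below is an assumption about what the commenter meant; the function name is hypothetical.

```python
def download_via_resource(s3_resource, bucket, key, filename):
    """Download an object using only a resource, without a separate client.

    s3_resource is expected to be a boto3 S3 resource (boto3.resource("s3")).
    """
    # The low-level client backing the resource is available as meta.client.
    s3_resource.meta.client.download_file(bucket, key, filename)


# Example usage (requires boto3 and valid AWS credentials):
# import boto3
# s3 = boto3.resource("s3")
# download_via_resource(s3, "BUCKET_NAME", "OBJECT_NAME", "FILE_NAME")
```

The resource interface also offers `s3.Bucket("BUCKET_NAME").download_file("OBJECT_NAME", "FILE_NAME")`, which has the same effect.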

But I needed the folder to be created automatically, just like aws s3 sync. Is that possible in boto3?

You would have to include the creation of a directory as part of your Python code. It is not an automatic capability of boto.

Here, the content of the S3 bucket is dynamic, so I have to check s3.

@Ben Please start a new Question rather than asking a question as a comment on an old question.

Clean and simple, any reason not to use this? It's much more understandable than all the other solutions. Collections seem to do a lot of things for you in the background.

Am I missing something, or does this assume you already know the file name? What if I just want to sync whatever is in the "directory" without knowing all the file names?

I guess you should first create all subfolders for this to work properly.

This code will put everything in the top-level output directory regardless of how deeply nested it is in S3.

And if multiple files have the same name in different directories, one will overwrite another.

I think you need one more line: os.

I'm currently achieving the task with my own code. If the directories do not exist, it creates them.

Got KeyError: 'Contents'.

Install awscli as a Python library with pip install awscli, then define this function: from awscli.
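A `KeyError: 'Contents'` typically appears when a `list_objects_v2` response matches no objects, because the `Contents` key is then left out of the response entirely. A defensive way to read the keys, sketched with a hypothetical helper name:

```python
def keys_in_response(response):
    """Return the object keys in one list_objects_v2 response (possibly none)."""
    # response["Contents"] raises KeyError for an empty listing,
    # so fall back to an empty list instead.
    return [obj["Key"] for obj in response.get("Contents", [])]


# Example usage (requires boto3 and valid AWS credentials):
# import boto3
# s3 = boto3.client("s3")
# response = s3.list_objects_v2(Bucket="BUCKET_NAME", Prefix="some/prefix/")
# print(keys_in_response(response))
```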

I'm using this code but have an issue where all the debug logs are showing. I have this declared globally: logging. Any ideas?

It is a very bad idea to get all files in one go; you should rather get them in batches.
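If the root logger is set to DEBUG, the AWS libraries log very verbosely. One possible remedy, sketched here, is to raise the level on their loggers only. The logger names are those used by boto3 and its dependencies; the helper name is hypothetical.

```python
import logging

# Loggers used by boto3 and the libraries it builds on.
NOISY_LOGGERS = ("boto3", "botocore", "s3transfer", "urllib3")


def quiet_aws_loggers(level=logging.WARNING):
    """Raise the log level of the AWS-related loggers, leaving others alone."""
    for name in NOISY_LOGGERS:
        logging.getLogger(name).setLevel(level)


# Typical use: configure your own logging first, then silence the libraries.
logging.basicConfig(level=logging.DEBUG)
quiet_aws_loggers()
```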

List only the new files that do not exist in the local folder, so that not everything is copied!
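Filtering out objects that already exist locally could look like the sketch below. It compares by path only (not by size or modification time, which aws s3 sync also considers), and the helper name is hypothetical.

```python
import os


def keys_missing_locally(keys, local_dir):
    """Return the keys whose corresponding local file does not exist yet."""
    missing = []
    for key in keys:
        # Map the S3 key onto a path below local_dir.
        local_path = os.path.join(local_dir, *key.split("/"))
        if not os.path.exists(local_path):
            missing.append(key)
    return missing
```

Only the returned keys then need to be passed to `download_file`.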


Uploading Files to S3 in Python Using Boto3


Both upload_file and upload_fileobj accept an optional ExtraArgs parameter that can be used for various purposes. The list of valid ExtraArgs settings is specified in the ALLOWED_UPLOAD_ARGS attribute of the S3Transfer object at boto3.s3.transfer.S3Transfer.ALLOWED_UPLOAD_ARGS. For example, an ExtraArgs setting can specify metadata to attach to the S3 object.

The download_fileobj method downloads an object from S3 to a file-like object. Its Callback parameter (function) is a method which takes the number of bytes transferred and is called periodically during the download. Its Config parameter (boto3.s3.transfer.TransferConfig) is the transfer configuration to be used when performing the download.

Using Boto3, my Python script downloads files from an S3 bucket, reads them, and writes the contents of the downloaded files to another file. My question is: how would it work the same way once the script runs in an AWS Lambda function?
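The `ExtraArgs` and `Callback` parameters can be sketched together as follows. The helper and class names are hypothetical and the metadata values are placeholders; the client is passed in as a parameter.

```python
import io
import threading


class ProgressCounter:
    """Callback that accumulates the number of bytes transferred so far."""

    def __init__(self):
        self._lock = threading.Lock()  # transfers may call back from threads
        self.bytes_seen = 0

    def __call__(self, bytes_amount):
        with self._lock:
            self.bytes_seen += bytes_amount


def upload_with_metadata(s3_client, filename, bucket, key, metadata):
    """Upload a file, attaching user metadata; returns bytes reported."""
    progress = ProgressCounter()
    s3_client.upload_file(
        filename, bucket, key,
        ExtraArgs={"Metadata": metadata},  # e.g. {"mykey": "myvalue"}
        Callback=progress,
    )
    return progress.bytes_seen


def download_to_memory(s3_client, bucket, key):
    """Download an object into an in-memory file-like object."""
    buffer = io.BytesIO()
    progress = ProgressCounter()
    s3_client.download_fileobj(bucket, key, buffer, Callback=progress)
    buffer.seek(0)  # rewind so the caller can read from the start
    return buffer, progress.bytes_seen


# Example usage (requires boto3 and valid AWS credentials):
# import boto3
# s3 = boto3.client("s3")
# upload_with_metadata(s3, "FILE_NAME", "BUCKET_NAME", "OBJECT_NAME",
#                      {"mykey": "myvalue"})
```

Downloading into memory avoids touching the local file system, which may be convenient in restricted environments.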
